Why feedback loops are important

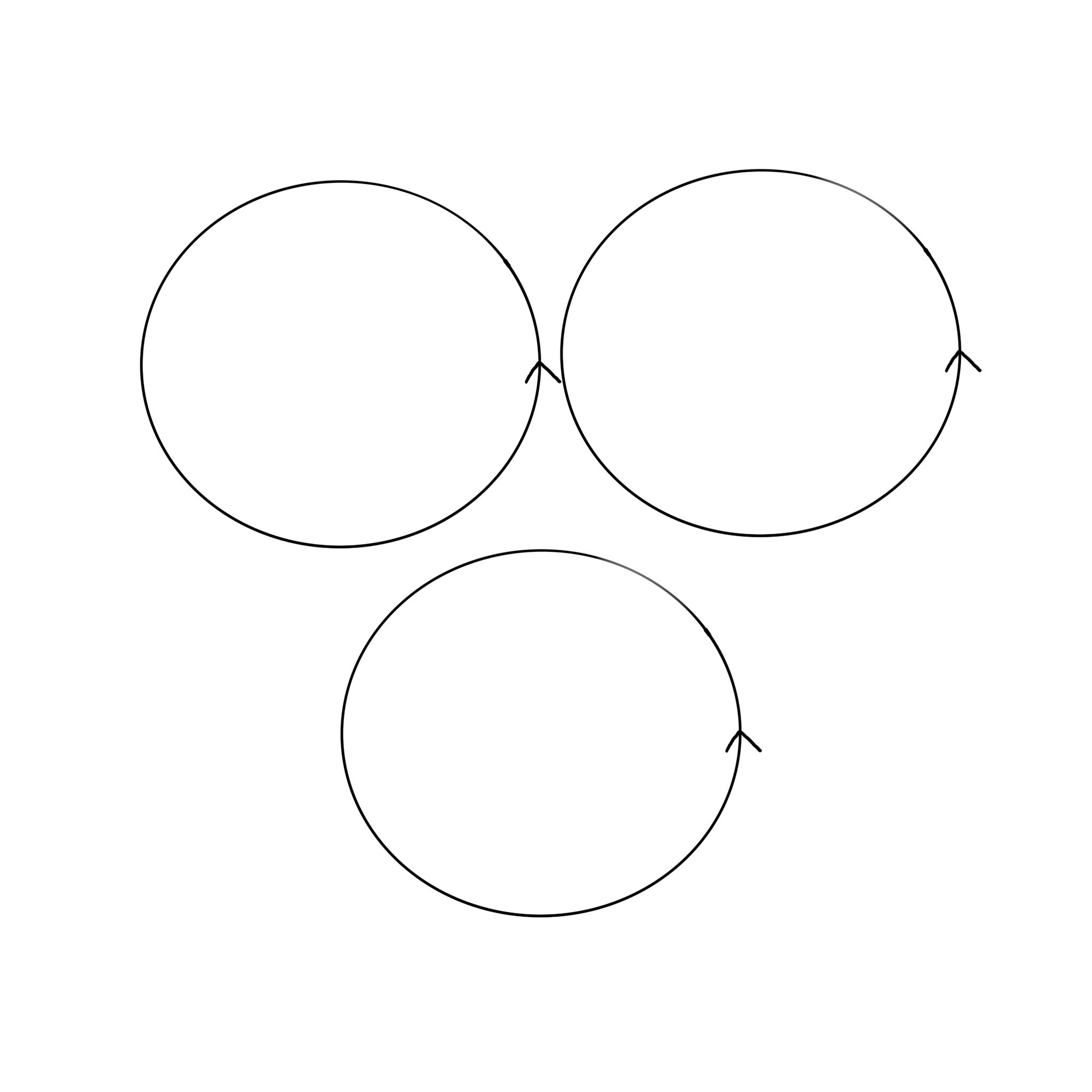

For your software product to win in the market, it is crucial to be able to build and release products quickly and confidently. To achieve this, we need fast feedback loops at every stage, from the feedback your linter/unit tests provide when you are writing the code, to the validation checks that occur before you can merge your code into the mainline branch, and then to releasing it to customers.

If your development process lacks a tight loop or misses certain validation checks at any point, it can lead to hours of lost time.

What does great look like?

- As you write code and hit save, the relevant unit tests and linters run on your file/module -- this is your extremely fast validation

- As you push your code up to a remote, various longer validation tests are run -- this should be 'relatively' quick, say < 20s, and can be opted out of

- As you merge your code into the mainline branch, all of the checks from the first two steps are validated, plus a set of more extensive tests

- Your code is then automatically released to production

- You have a comprehensive set of alarms. If there are any issues that you were unable to capture from your tests, you are then alerted and can quickly roll forward or back

Why does this approach work so well? We run the inexpensive tests frequently, right as the developer makes the change, and leave the slower, more limited tests for later in the pipeline. The important part is that these slower tests should not be testing behaviour; they simply verify, smoke-test style, that the various components work together.

My project is massive, and it's not possible for these to be fast

All of what I said is great in theory, but in practice, the systems that people work on are potentially massive, and it may not be easy to get fast feedback. Here are some strategies.

1. Have strong system boundaries

The most important part of fast feedback is to limit the scope of what needs to be tested when a change occurs. This is absolutely critical.

The simplest case to think about is microservices, each in their own repository. If any file changes within a repository, then only that repository's tests need to be run. To ensure that we do not break any consumers of our microservice, we have tests that validate that we have not broken any contracts.

Microservices are easy to think about because there is a very clear boundary (the repository), and typically they never get big enough that you need to be 'too smart' about which tests to run and when. Though, as with anything, there is no free lunch. The main benefit of microservices (separation) is also their Achilles heel: it becomes really hard to share common code. A single change could involve updating multiple repositories, sequencing deployments between microservices correctly, and other similar tasks. It also becomes difficult to know which versions of services are compatible with each other.

A potentially better approach to this problem is a mono-repo. The idea is that you have independently deployable services that all live within the same repository. This potentially resolves many of the issues with sharing and compatibility. Because the whole repo is available to you, you can use an IDE's refactoring tools to make repo-wide changes and catch incompatibilities (remember fast feedback loops). A change or refactor can now exist as a single commit across multiple services, and every service can draw on a common set of shared utilities. Again, there is no free lunch, and this comes with drawbacks too. Whenever a common library changes, every service may need to be redeployed, and you need really good tooling to detect which services have changed (see change detection below).

My belief is that the mono-repo is the best solution, as its problems can be more easily solved with good tooling, though, if your team is unable or unwilling to invest in that tooling, then the repo boundary is better than re-running everything all the time.

2. Have change detection / incremental deployment

When working within a team, you will likely encounter numerous system components that are constantly changing. This means that when you pull down someone else's code, you should be able to immediately update your development environment (this will be true for both mono-repos and microservices). To do this quickly and effectively, you need to know what has changed. This can be achieved in two ways: one is through Git tagging, and the other is by storing a build hash.

For the Git tag, the idea is that when you deploy your local infrastructure, you tag the commit you deployed from. When you pull down code from the remote, your tools can then run `git diff <tagged-infra-commit> --name-only` to see what has changed since then. If no files have been touched, then the deployed code is considered 'current'.
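As a rough illustration of the Git tag approach, here is a minimal Python sketch. The tag name `deployed-infra` and the function names are assumptions made for this example, not part of any existing tool; the only Git commands used are `git diff <commit> --name-only` and `git tag --force`.

```python
import subprocess
from typing import List

# Hypothetical tag name used to remember which commit was last deployed locally.
INFRA_TAG = "deployed-infra"


def parse_changed_files(diff_output: str) -> List[str]:
    """Turn the output of `git diff --name-only` into a list of paths."""
    return [line.strip() for line in diff_output.splitlines() if line.strip()]


def changed_since_deploy(tag: str = INFRA_TAG) -> List[str]:
    """List files that differ between the tagged commit and the working tree."""
    result = subprocess.run(
        ["git", "diff", tag, "--name-only"],
        check=True,
        capture_output=True,
        text=True,
    )
    return parse_changed_files(result.stdout)


def mark_deployed(tag: str = INFRA_TAG) -> None:
    """Move the tag to the current commit after a successful local deploy."""
    subprocess.run(["git", "tag", "--force", tag, "HEAD"], check=True)
```

A deploy script could call `changed_since_deploy()` first, skip the deploy when the list is empty, and call `mark_deployed()` once a deploy succeeds.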

For the build hash, typically, your infrastructure will handle this, say, via incremental compilation. Though you could build your own basic hash by taking all of your source files, generating hashes for them (including any kind of lock file), and then comparing that against one that has been deployed.
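A basic home-grown build hash could look something like the sketch below. The glob patterns, the function names, and the idea of keeping the last deployed hash in a small text file are all assumptions for illustration; real build tools usually do something more sophisticated.

```python
import hashlib
from pathlib import Path
from typing import Iterable, Mapping


def hash_contents(files: Mapping[str, bytes]) -> str:
    """Combine file names and contents into one digest, independent of input order."""
    digest = hashlib.sha256()
    for name in sorted(files):
        digest.update(name.encode("utf-8"))
        digest.update(b"\0")  # separator so name and content cannot run together
        digest.update(files[name])
        digest.update(b"\0")
    return digest.hexdigest()


def build_hash(root: Path, patterns: Iterable[str] = ("**/*.py", "*.lock")) -> str:
    """Hash every source file and lock file under `root` (patterns are an assumption)."""
    files = {
        path.relative_to(root).as_posix(): path.read_bytes()
        for pattern in patterns
        for path in root.glob(pattern)
        if path.is_file()
    }
    return hash_contents(files)


def needs_deploy(root: Path, deployed_hash_file: Path) -> bool:
    """Compare the current hash with the one recorded at the last deploy."""
    if not deployed_hash_file.exists():
        return True
    return deployed_hash_file.read_text().strip() != build_hash(root)
```

Including the lock file in the hash means a dependency bump triggers a redeploy even when no source file has changed.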

A nice aspect of this change detection/incremental deployment approach is that it should work on your development environment and on any type of CI environment. The goal is simple: if your code does not change, you should not need to deploy anything.

3. Unit and Integration Tests

Many people debate the definitions of a unit test and an integration test. To me, a unit test is a test that is scoped to an individual file/module, and an integration test is something that brings together multiple files/modules. The unit test should be located within or beside the file, and the integration test should be located outside of the module structure.

When we take this approach, we can make the unit test feedback loop extremely fast. This is because when we make a change, we can then locate the corresponding test file and run all the associated tests. This can be done using a file system watcher.
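To make the "locate the corresponding test file" step concrete, here is a small sketch. It assumes a naming convention where `invoice.py` is tested by a sibling `test_invoice.py`; that convention and the function names are hypothetical, so adapt them to your project. The command it builds uses `python -m pytest <path>`.

```python
import sys
from pathlib import PurePosixPath
from typing import List, Optional


def sibling_test_path(changed: str) -> Optional[str]:
    """Map a changed source file to the test file that sits beside it."""
    path = PurePosixPath(changed)
    if path.suffix != ".py":
        return None  # not a Python source file, nothing to run
    if path.name.startswith("test_"):
        return str(path)  # the test file itself was edited
    return str(path.with_name(f"test_{path.name}"))


def pytest_command(changed: str) -> Optional[List[str]]:
    """Build the command a file watcher could run when `changed` is saved."""
    test_path = sibling_test_path(changed)
    if test_path is None:
        return None
    return [sys.executable, "-m", "pytest", test_path]
```

A file system watcher would call `pytest_command` with the saved path and run the result, so only the tests for the module being edited are executed.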

Integration tests are a little trickier; they should be run either on commit or when the user attempts to push those changes to the remote. What is really important here is that users have a way to opt out of these tests, for example when they want to push something up just to show someone else.
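One way to offer that opt-out is a Git pre-push hook that checks an environment variable before running the tests. The sketch below is only an illustration: the variable name `SKIP_INTEGRATION` and the `tests/integration` directory are assumptions. Git itself also lets you bypass the pre-push hook with `git push --no-verify`.

```python
import os
import subprocess
import sys
from typing import Mapping

# Hypothetical environment variable a developer can set to skip the tests.
SKIP_VAR = "SKIP_INTEGRATION"


def should_run_integration(env: Mapping[str, str]) -> bool:
    """Run the tests unless the developer has explicitly opted out."""
    return env.get(SKIP_VAR, "").lower() not in {"1", "true", "yes"}


def main() -> int:
    """Return the exit code the hook should finish with (non-zero blocks the push)."""
    if not should_run_integration(os.environ):
        print(f"{SKIP_VAR} is set, skipping integration tests")
        return 0
    # The test directory is an assumption; point this at your own integration suite.
    completed = subprocess.run([sys.executable, "-m", "pytest", "tests/integration"])
    return completed.returncode
```

Saved as `.git/hooks/pre-push` with a final `sys.exit(main())` line, this would block a push when the integration tests fail, while `SKIP_INTEGRATION=1 git push` lets a work-in-progress branch through.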

4. Smoke tests

Smoke tests that run on cloud infrastructure are critical to having confidence that a given change will not break something in production. Because these tests are typically the slowest, we only want to run them when necessary. Once we have change detection, it becomes easy to identify which tests need to run on the PR: we identify the services that differ between our branch and the mainline, and run only the smoke tests for those services. It is absolutely critical that these tests do not test functionality; they should only answer 'does the happy path for the service run on cloud infra?'.
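Here is a sketch of how the list of changed files could be mapped to the services whose smoke tests need to run. It assumes a mono-repo laid out as `services/<name>/...` with shared code under `libs/`; that layout and the function name are hypothetical. The changed files could come from something like `git diff --name-only main...HEAD`, assuming the mainline branch is called `main`.

```python
from pathlib import PurePosixPath
from typing import Iterable, Set


def services_to_smoke_test(changed_files: Iterable[str], all_services: Set[str]) -> Set[str]:
    """Return the services whose smoke tests should run for this set of changes."""
    affected: Set[str] = set()
    for changed in changed_files:
        parts = PurePosixPath(changed).parts
        # A change to shared code may affect every service, so test them all.
        if len(parts) >= 2 and parts[0] == "libs":
            return set(all_services)
        # A change inside services/<name>/ only affects that one service.
        if len(parts) >= 3 and parts[0] == "services" and parts[1] in all_services:
            affected.add(parts[1])
    return affected
```

Treating any shared-library change as touching every service is deliberately conservative; a real dependency graph would let you narrow that down.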

If a smoke test detects an issue, that is undesirable, because it means the issue was caught by the slowest feedback loop. When this happens, we should try to cover that failure with a unit or integration test so that it is caught earlier next time.

5. Ensuring that all tests can be run on your local machine

It is a significant anti-pattern for tests to be runnable only on CI infrastructure (or have some kind of different CI-based configuration). All the CI infrastructure should be doing is calling into the same Docker container/infra that you would be running locally. By ensuring consistency, you can easily make changes to your tests and then validate them locally without needing to push them to CI.

6. Measuring your feedback loops

Over time, as your project becomes sufficiently complex, it is easy for a developer to accidentally introduce a change that slows down certain tests. It is also really hard to detect when this happens, or what caused it. As part of your test infrastructure, you should report test run time and track it over time. This makes it easy to identify (and hopefully fix) a change that caused the tests to slow down.
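As a sketch of what tracking run time could look like, the function below compares the latest duration of each test against the median of its previous runs. The thresholds (1.5x slower, and at least 0.1 seconds) and the shape of the data are assumptions chosen for illustration.

```python
from statistics import median
from typing import Dict, List


def slow_tests(
    history: Dict[str, List[float]],
    latest: Dict[str, float],
    ratio: float = 1.5,
    min_seconds: float = 0.1,
) -> Dict[str, float]:
    """Return tests whose latest run is much slower than their historical median.

    The result maps each flagged test name to its slowdown factor.
    """
    flagged: Dict[str, float] = {}
    for name, seconds in latest.items():
        past = history.get(name)
        if not past:
            continue  # no baseline yet, for example a brand new test
        baseline = median(past)
        # Ignore very fast tests, where small timing noise looks like a big ratio.
        if seconds >= min_seconds and seconds > baseline * ratio:
            flagged[name] = seconds / baseline if baseline > 0 else float("inf")
    return flagged
```

Running this in CI against durations recorded per commit would point at the commit where a test first crossed the threshold.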

Conclusion

Having fast feedback loops is not something you get for free; it requires a lot of thought and design. Once you work on a system where you have the ability to move quickly, you are significantly more productive.